Sidekiq as a Microservice Message Queue

In the recent series on transitioning to microservices, I detailed a path to move a large legacy Rails monolith to a cluster of a dozen microservices. But not everyone starts out with a legacy monolith. In fact, given Rails’ popularity amongst startups, it’s likely most Rails applications don’t live to see 4+ years in production. So what if we don’t have a huge monolith on our hands? Are microservices still out of the question?

Sadly, the answer is, “it depends”. The “depends” part is specific to your context. While microservices may seem like the right move for you and your application, it’s also possible they could cause a mess if not introduced carefully.

This post will explore opportunities for splitting out unique microservices using Sidekiq, without introducing an enterprise message broker like RabbitMQ or Apache Kafka.

When are Microservices right?

Martin Fowler wrote about trade-offs that come when introducing microservices.

The article outlines 6 pros and cons introduced when you move to a microservices-based architecture. The strongest argument for microservices is the strengthening of module boundaries.

Module boundaries are naturally strengthened when we’re forced to move code to another codebase. The result is that, in most cases, a group of microservices appears to be better constructed than the legacy monolith it was extracted from.

There’s no doubt Rails allows developers to get something up and running very quickly. Sadly, you can do so while making a big mess at the same time. It’s worth noting there’s nothing stopping a monolith from being well constructed. With some discipline, your monolith can be the bright and shiny beauty that DHH wants it to be.

Sidekiq Queues

Ok, ok. You get it. Microservices can be awesome, but they can also make a big mess. I want to tell you about how I recently avoided a big mess without going “all in”.

There’s no hiding I’m a huge Sidekiq fan. It’s my go-to solution for background processing.

Sidekiq has the notion of named queues for both jobs and workers. This is great because it allows you to put that unimportant long-running job in a different queue without delaying other important fast-running jobs.

A typical worker might look like:

    class ImportantWorker
      include Sidekiq::Worker

      def perform(id)
        # Do the important stuff
      end
    end

If we want to send this job to a different queue, we’d add sidekiq_options queue: :important to the worker, resulting in:

    class ImportantWorker
      include Sidekiq::Worker
      sidekiq_options queue: :important

      def perform(id)
        # Do the important stuff
      end
    end

Now, we need to make sure the worker process that’s running the jobs knows to process jobs off this queue. A typical worker might be invoked with:

    bin/sidekiq

Since new jobs going through this worker will end up on the important queue, we want to make sure the worker is processing jobs from the important queue too:

    bin/sidekiq -q important -q default

Note: Jobs that don’t specify a queue will go to the default queue. We have to include the default queue when we use the -q option; otherwise the default queue will be ignored in favor of the queues passed with -q.

The best part: you don’t even need multiple worker processes to process jobs from multiple queues. Furthermore, the important queue can be checked twice as often as the default queue:

    bin/sidekiq -q important,2 -q default

This flexibility of where jobs are enqueued and how they’re processed gives us an incredible amount of freedom when building our applications.
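To make the weighting concrete, here is a rough model of what “checked twice as often” can mean. It is a simplified sketch, not Sidekiq’s actual fetch code: the `polling_order` method and the `weights` hash are names made up for illustration, and the model simply assumes each queue is listed once per unit of weight and the list is shuffled before every fetch.

```ruby
# Simplified model of weighted polling (an assumption for illustration,
# not Sidekiq's implementation): each queue appears once per unit of weight,
# the list is shuffled on every fetch, and duplicates are dropped.
def polling_order(weights, random: Random.new)
  weights.flat_map { |queue, weight| [queue] * weight }
         .shuffle(random: random)
         .uniq
end

weights = { "important" => 2, "default" => 1 }
rng = Random.new(42) # fixed seed so repeated runs behave the same
trials = 10_000

# Count how often the important queue is the first one checked.
important_first = trials.times.count do
  polling_order(weights, random: rng).first == "important"
end

share = important_first.fdiv(trials)
puts "important checked first in #{(share * 100).round(1)}% of fetches"
```

Under this model the important queue is looked at first in roughly two out of three fetches, while the default queue is still checked every time, just later in the order.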

Extracting Worker to a Microservice

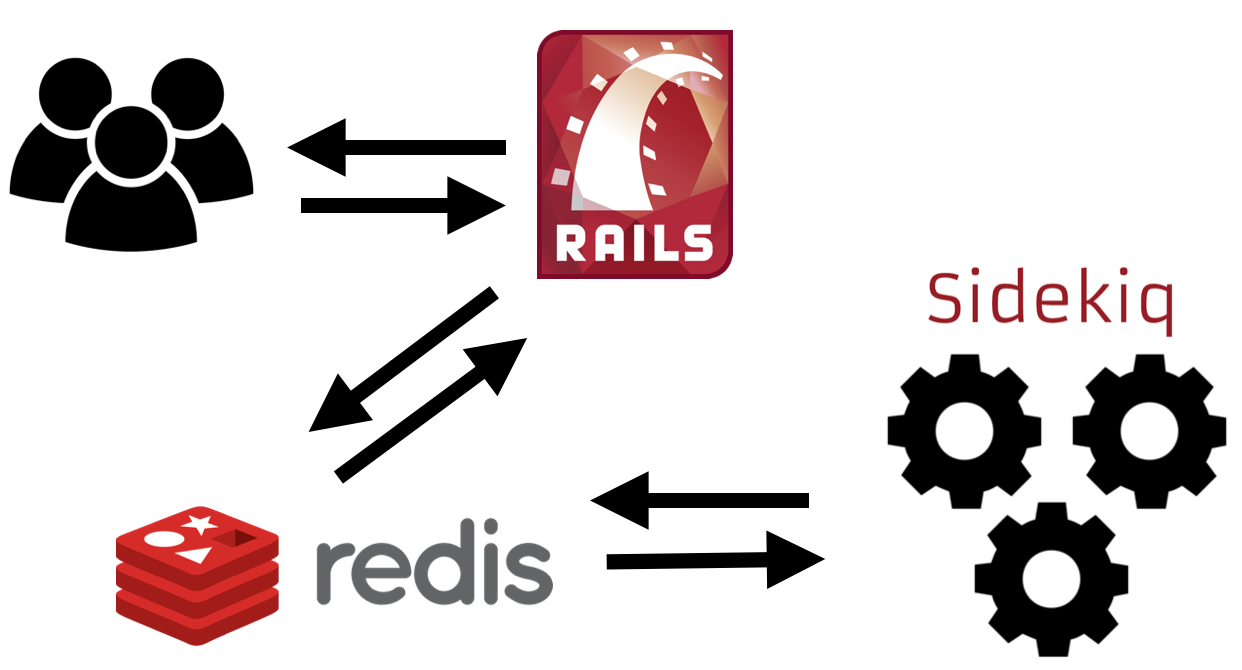

Let’s assume that we’ve deployed your main application to Heroku. The application uses Sidekiq and we’ve included a Redis add-on. With the addition of the add-on, our application now has a REDIS_URL environment variable that Sidekiq connects to on startup. We have a web process, and worker process. A pretty standard Rails stack:

What’s stopping us from using that same REDIS_URL in another application?

Nothing, actually. And if we consider what we know about the isolation of jobs in queues and workers working on specific queues, there’s nothing stopping us from having workers for a specific queue in a different application altogether.

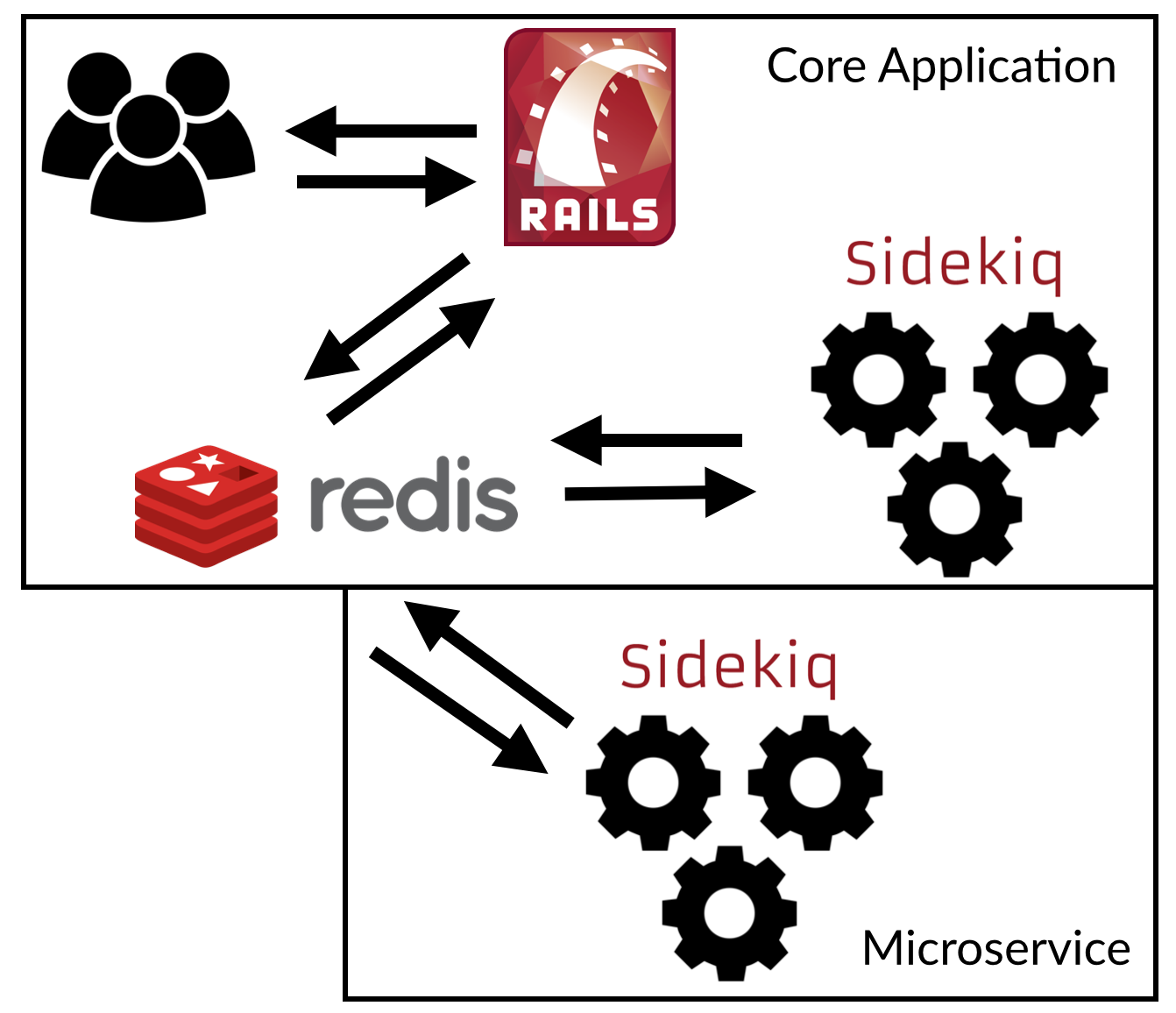

Remember ImportantWorker, imagine the logic for that job was better left for a different application. We’ll leave that part a little hand-wavey because there still should be a really good reason to do so. But we’ll assume you’ve thought long and hard about this and decided the core application was not a great place for this job logic.

Extracting the worker a separate application might now look something like this:

Enqueueing Jobs with the Sidekiq Client

Typically, to enqueue the ImportantWorker above, we’d call the following from our application:

    ImportantWorker.perform_async(1)

This works great when ImportantWorker is defined in our application. With the expanded stack above, ImportantWorker now lives in a new microservice, which means we don’t have access to the ImportantWorker class from within the application. We could define it in the application just so we can enqueue it, with the intent that the application won’t process jobs for that worker, but that feels funny to me.

Rather, we can turn to the underlying Sidekiq client API to enqueue the job instead:

    Sidekiq::Client.push(
      "class" => "ImportantWorker",
      "queue" => "important",
      "args" => [1]
    )

Note: We have to be sure to define the class as a string "ImportantWorker", otherwise we’ll get an exception during enqueuing because the worker isn’t defined in the application.
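Because the job hash is the only contract the two applications share, it can help to build it in one place. The following is a minimal sketch of that idea; the `ImportantJob` module and its `payload` method are hypothetical names, not part of Sidekiq. It also rejects arguments that would not survive a JSON round trip, since Sidekiq stores job arguments as JSON and the microservice only ever sees the deserialized values.

```ruby
require "json"

# Hypothetical helper (not part of Sidekiq): builds the hash that the core
# application would hand to Sidekiq::Client.push.
module ImportantJob
  WORKER_CLASS = "ImportantWorker" # a string, because the class lives in the other app
  QUEUE = "important"

  def self.payload(*args)
    # Job arguments are serialized as JSON, so refuse anything that changes
    # shape on the way through (symbols, Time objects, model instances, ...).
    unless JSON.parse(JSON.generate(args)) == args
      raise ArgumentError, "job arguments must be plain JSON types: #{args.inspect}"
    end

    { "class" => WORKER_CLASS, "queue" => QUEUE, "args" => args }
  end
end

# In the core application, where Sidekiq is already loaded:
#   Sidekiq::Client.push(ImportantJob.payload(1))
```

Keeping the class name and queue name as constants in one module means a rename in the microservice only has to be mirrored in a single spot in the core application.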

Processing Sidekiq Jobs from a Microservice

Now we’re pushing jobs to the important queue, but have nothing in our application to process them. In fact, our worker process isn’t even looking at that queue:

    bin/sidekiq -q default

From our microservice, we set up a worker process to ONLY look at the important queue:

    bin/sidekiq -q important

We define the ImportantWorker in our microservice:

    class ImportantWorker
      include Sidekiq::Worker
      sidekiq_options queue: :important

      def perform(id)
        # Do the important stuff
      end
    end

And now when the worker picks jobs out of the important queue, it’ll process them using the ImportantWorker defined above in our microservice.

If we wanted to go one step further, the microservice could then enqueue a job using the Sidekiq client API to a queue that only the core application is working on in order to send communication back the other direction.
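The round trip can be pictured with a toy in-memory model. This is only a sketch under loud assumptions: a plain Hash stands in for the shared Redis instance, `push` and `drain` are stand-ins for `Sidekiq::Client.push` and the `bin/sidekiq -q ...` processes, and `ImportantResultWorker` is a hypothetical worker that would live in the core application.

```ruby
require "json"

# In-memory stand-in for the shared Redis: queue name => list of JSON jobs.
QUEUES = Hash.new { |hash, name| hash[name] = [] }

# Mimics the shape of the job hash: class name as a string, queue, args.
def push(job)
  QUEUES[job.fetch("queue")] << JSON.generate(job)
end

# Each "application" knows only its own workers and reads only its own queue.
def drain(queue, workers)
  while (raw = QUEUES[queue].shift)
    job = JSON.parse(raw)
    workers.fetch(job["class"]).call(*job["args"])
  end
end

RESULTS = []

# Workers defined in the microservice.
MICROSERVICE_WORKERS = {
  "ImportantWorker" => lambda do |id|
    # Do the important stuff, then report back on a queue the core app works.
    push({ "class" => "ImportantResultWorker", "queue" => "default", "args" => [id, "done"] })
  end
}

# Workers defined in the core application (hypothetical reply handler).
CORE_WORKERS = {
  "ImportantResultWorker" => ->(id, status) { RESULTS << [id, status] }
}

push({ "class" => "ImportantWorker", "queue" => "important", "args" => [1] }) # core app enqueues
drain("important", MICROSERVICE_WORKERS) # microservice processes its queue
drain("default", CORE_WORKERS)           # core app picks up the reply
```

Neither side references the other’s worker classes; the only things they agree on are the queue names, the class-name strings, and the shape of the arguments.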

Summary

Any architectural decision has risks, and microservices are no exception. Still, microservices built on shared Sidekiq queues can be easier to run than an enterprise message broker, a cluster of new servers, and a handful of devops headaches.

I originally dubbed this the “poor man’s message bus”. With more thought, there’s nothing “poor” about this. Sidekiq has been a reliable piece of our infrastructure and I have no reason to believe that’ll change, even if we are using it for more than just simple background processing from a single application.