Monitoring Sidekiq Using AWS Lambda and Slack

It’s no mystery I’m a Sidekiq fan: it’s my background job processing library of choice for any non-trivial application. My favorite feature of Sidekiq has to be retries. By default, a failed job will be retried 25 times over the course of approximately 21 days.

As a remote company, we use Slack to stay in touch with everyone AND to manage and monitor our infrastructure (hello #chatops). We can deploy from Slack (though we generally don’t, since we have full CI) and be notified of infrastructure and application errors.

When Sidekiq retries accumulate, it’s a good indication that something more severe might be wrong. Rather than get an email we won’t see for 30 minutes, we decided to integrate these notifications into Slack. In doing so, we found AWS Lambda to be a lightweight way to tie Sidekiq monitoring and Slack notifications together.

The Problem

Bark is background job-heavy. The web application is a glorified CRUD app that sets up the data needed to poll a child’s social media feed and monitor for potential issues. The best-case scenario for a parent is that they will never hear from us.

Because Bark’s background jobs commonly interact with 3rd-party APIs, failures aren’t a big surprise. APIs can be down and network connections can fail; Sidekiq’s retry logic protects us from these transient errors. Under normal circumstances, failed jobs retry and eventually succeed on a later attempt. These are non-issues that don’t need an engineer to investigate.

There are times when retries accumulate, giving us a strong indication that something more severe may be wrong. Initially, we set up New Relic to notify us of an increased error rate. This worked for simple cases, but it sometimes produced false positives. As a result, we started to ignore those alerts, which potentially masked more important issues.

We soon realized that the number of retries in Sidekiq was a useful gauge of application health. We have the Sidekiq Web UI mounted within our admin application, so we’d browse there a few times a day to make sure the number of retries wasn’t outside our expectations (in our case, up to 50 was acceptable).

This wasn’t a great use of our time. Ideally, we wanted a Slack notification when the number of Sidekiq retries was > 50.

The Solution

Because Bark is on AWS, we naturally looked to their tools for assistance. In this case, we needed something that would poll Sidekiq, check the number of retries, and POST to Slack if the number of retries was > 50.
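Stripped of the HTTP plumbing, the check itself is a single comparison. The sketch below is a hypothetical helper (the name buildRetryAlert and the message format are illustrative, not from our codebase) that takes the parsed stats and the threshold and returns either a Slack payload or null:

```javascript
// Hypothetical helper: decide whether a stats snapshot warrants a Slack alert.
// Assumes `stats` has a numeric `retries` field, e.g. { retries: 12, busy: 1 }.
function buildRetryAlert(stats, threshold) {
  if (typeof stats.retries !== 'number') {
    throw new TypeError('stats.retries must be a number');
  }
  // Up to the threshold is acceptable; only strictly greater values alert
  if (stats.retries <= threshold) {
    return null;
  }
  return { text: 'Sidekiq retries - ' + stats.retries + ' (threshold ' + threshold + ')' };
}

console.log(buildRetryAlert({ retries: 12 }, 50)); // null
console.log(buildRetryAlert({ retries: 73 }, 50)); // { text: 'Sidekiq retries - 73 (threshold 50)' }
```

Keeping the comparison in a pure function like this makes the boundary case (exactly 50 retries) easy to test without any network access.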

There were a few options:

- Add the Sidekiq polling and Slack notification logic to our main application and set up a cron job

- Create a new satellite application that ONLY does the above (microservices???)

- Set up an AWS Lambda function to handle the above logic

The first two options would’ve worked, but I was hesitant to add complexity to our main application. I was also hesitant to take on managing another application (updates, etc.) for something that seemed simple.

Option “AWS Lambda” won! Let’s take a look at the implementation.

Sidekiq Queue Data Endpoint

First, we need to expose the number of Sidekiq retries somehow. As I mentioned above, the Sidekiq Web UI is mounted in our admin application, but it sits behind an authentication layer that would’ve been non-trivial to expose publicly.

Instead, we created a new Rails route to respond with some basic details about the Sidekiq system.

```ruby
require 'sidekiq/api'

class SidekiqQueuesController < ApplicationController
  # Public on purpose: the response contains only counts and queue names
  skip_before_action :require_authentication

  def index
    base_stats = Sidekiq::Stats.new

    stats = {
      enqueued: base_stats.enqueued,   # jobs waiting across all queues
      queues: base_stats.queues,       # queue name => number of waiting jobs
      busy: Sidekiq::Workers.new.size, # jobs currently being processed
      retries: base_stats.retry_size   # jobs waiting in the retry set
    }

    render json: stats
  end
end
```

We also need a route:

```ruby
resources :sidekiq_queues, only: [:index]
```

As you can see, the endpoint is public, but it exposes only counts and queue names: no job arguments or job class names. The code digs into the Sidekiq API to interrogate the size of the queues.
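Because the Lambda function will parse whatever this endpoint returns, it can be worth checking the shape before trusting it (a proxy error page, for example, is not JSON). A minimal sketch, assuming the field names rendered by the controller above; parseSidekiqStats is a hypothetical name:

```javascript
// Hypothetical parser for the stats endpoint's body. Assumes the shape rendered
// by SidekiqQueuesController#index: { enqueued, queues, busy, retries }.
function parseSidekiqStats(text) {
  const data = JSON.parse(text); // throws SyntaxError on malformed JSON (e.g. an HTML error page)

  for (const key of ['enqueued', 'busy', 'retries']) {
    if (!Number.isInteger(data[key]) || data[key] < 0) {
      throw new Error(`Expected "${key}" to be a non-negative integer`);
    }
  }
  // Sidekiq::Stats#queues serializes to an object of queue name => size
  if (data.queues === null || typeof data.queues !== 'object' || Array.isArray(data.queues)) {
    throw new Error('Expected "queues" to be an object of queue name => size');
  }
  return data;
}

const stats = parseSidekiqStats(
  '{"enqueued":4,"queues":{"default":3,"mailers":1},"busy":2,"retries":7}'
);
console.log(stats.retries);             // 7
console.log(Object.keys(stats.queues)); // [ 'default', 'mailers' ]
```

Failing loudly on a bad body means a broken endpoint shows up as a failed Lambda invocation instead of a silent "retries were undefined" log line.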

Slack Incoming WebHook

We want to be able to POST to Slack when the number of Sidekiq retries is > 50. To do this, we’ll set up a custom incoming webhook integration in Slack.

We’ll start by choosing Apps & integrations from the main Slack menu. From here, choose Manage in the top right, and then Custom Integrations on the left. You’ll have two options:

- Incoming WebHooks

- Slash Commands

We’ll choose Incoming WebHooks and click Add Configuration to add a new one. From here, we’ll supply the information needed to specify the channel where the notifications will appear and how they look.

The most important part of this step is getting the Webhook URL. This is the URL our Lambda function will POST to when retries are above our acceptable threshold.
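An incoming webhook accepts a JSON POST whose body has at least a text field. The sketch below assembles that request; buildWebhookRequest is a hypothetical helper and the URL in the example is a made-up placeholder. As far as we know, the channel override only applies to legacy custom-integration webhooks like the one created here, while app-based webhooks always post to the channel chosen at install time.

```javascript
// Hypothetical helper: build the pieces of a POST to a Slack incoming webhook.
// The optional `channel` override is assumed to apply only to legacy
// custom-integration webhooks; app-based webhooks ignore it.
function buildWebhookRequest(webhookUrl, text, channel) {
  const url = new URL(webhookUrl); // throws TypeError on a malformed URL
  if (url.protocol !== 'https:') {
    throw new Error('Slack webhook URLs should use https');
  }
  const payload = channel ? { channel: channel, text: text } : { text: text };
  const body = JSON.stringify(payload);
  return {
    url: url.toString(),
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'Content-Length': Buffer.byteLength(body), // bytes, not characters
    },
    body: body,
  };
}

const req = buildWebhookRequest(
  'https://hooks.slack.com/services/T000/B000/XXXX', // placeholder, not a real token
  'Sidekiq retries - 73',
  '#operations'
);
console.log(req.body); // {"channel":"#operations","text":"Sidekiq retries - 73"}
```

The resulting object maps directly onto https.request(req.url, { method, headers }) followed by a write of req.body, which is what postMessageToSlack does inline in the Lambda function.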

AWS Lambda Function

Now that we have an endpoint exposing the number of retries (among other things) and a Slack webhook URL to POST to, we need to set up an AWS Lambda function to tie the two together. We’ll start by creating a new Lambda function with the defaults, using the latest Node.js runtime.

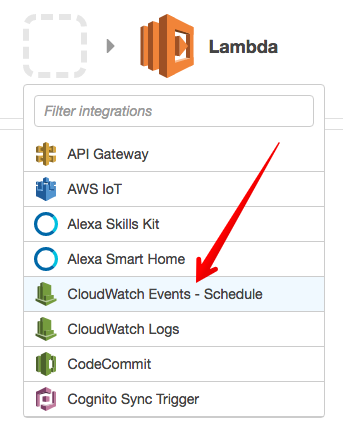

For the trigger, we’ll use “CloudWatch Events - Schedule”:

From here, we’ll enter a name and description for our rule and define its rate (I chose every 5 minutes). Enable the trigger, and we’ll move on to defining our code. Next, we’ll give the function a name and choose the latest Node.js as the runtime. Within the inline editor, we’ll use the following code:

```javascript
var https = require('https');

// Replace these placeholders with real values
var sidekiqUrl = '[Sidekiq queue JSON endpoint]';
var hookUrl = '[Slack Incoming WebHooks URL w/ token]';
var slackChannel = '#operations'; // Enter the Slack channel to send a message to
var retryThreshold = 50;          // Alert when retries exceed this number

// POST a JSON message to the Slack incoming webhook
var postMessageToSlack = function(message, callback) {
  var body = JSON.stringify(message);
  var options = {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'Content-Length': Buffer.byteLength(body)
    }
  };

  var postReq = https.request(hookUrl, options, function(res) {
    var chunks = [];
    res.setEncoding('utf8');
    res.on('data', function(chunk) {
      chunks.push(chunk);
    });
    res.on('end', function() {
      callback(null, {
        body: chunks.join(''),
        statusCode: res.statusCode,
        statusMessage: res.statusMessage
      });
    });
  });

  // Network failures (DNS, TLS, connection resets) are reported here
  postReq.on('error', callback);
  postReq.write(body);
  postReq.end();
};

// GET the stats JSON from our Rails endpoint
var getQueueStats = function(callback) {
  var options = { headers: { 'Accept': 'application/json' } };

  var getReq = https.request(sidekiqUrl, options, function(res) {
    var body = '';
    res.setEncoding('utf8');

    // Another chunk of data has been received, so append it to `body`
    res.on('data', function(chunk) {
      body += chunk;
    });

    // The whole response has been received, so check and parse it
    res.on('end', function() {
      if (res.statusCode !== 200) {
        return callback(new Error('Stats endpoint returned ' + res.statusCode));
      }
      var stats;
      try {
        stats = JSON.parse(body);
      } catch (err) {
        return callback(err);
      }
      callback(null, stats);
    });
  });

  getReq.on('error', callback);
  getReq.end();
};

var processEvent = function(event, callback) {
  getQueueStats(function(err, stats) {
    if (err) {
      return callback(err); // Fail the invocation so it shows up in metrics and logs
    }
    console.log('STATS: ', stats);

    var retries = stats.retries;

    if (retries > retryThreshold) {
      var slackMessage = {
        channel: slackChannel,
        text: 'www Sidekiq retries - ' + retries
      };

      postMessageToSlack(slackMessage, function(err, response) {
        if (err) {
          return callback(err);
        }
        if (response.statusCode < 400) {
          console.info('Message posted successfully');
          callback(null);
        } else if (response.statusCode < 500) {
          console.error('Error posting message to Slack API: ' + response.statusCode + ' - ' + response.statusMessage);
          callback(null); // Don't retry because the error is due to a problem with the request
        } else {
          // Let Lambda retry
          callback(new Error('Server error when processing message: ' + response.statusCode + ' - ' + response.statusMessage));
        }
      });
    } else {
      console.info('Sidekiq retries were ' + retries + '. Below threshold.');
      callback(null);
    }
  });
};

exports.handler = function(event, context, callback) {
  processEvent(event, callback);
};
```

Note: sidekiqUrl and hookUrl need to be set to appropriate values for this to work.

Review and save the Lambda function and we’re all set!

Review

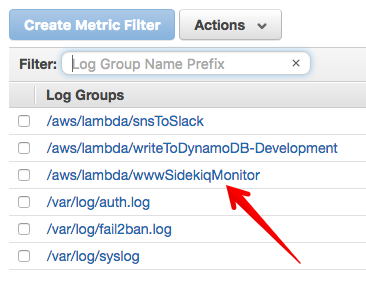

We can review the Lambda function logs on CloudWatch. Go to CloudWatch and choose “Logs” from the left menu. From here, we’ll click the link to the name of our Lambda function:

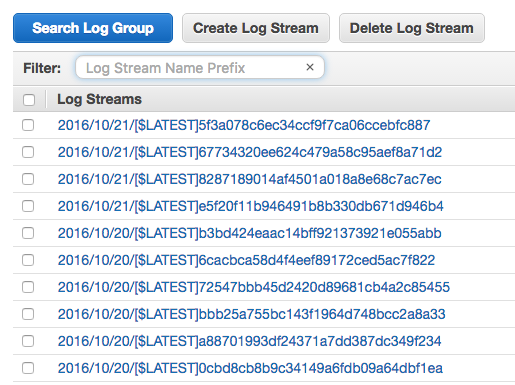

From here, logs for each invocation of the Lambda function will be grouped into log streams:

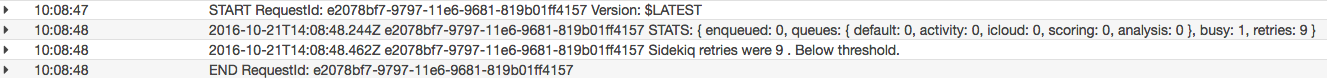

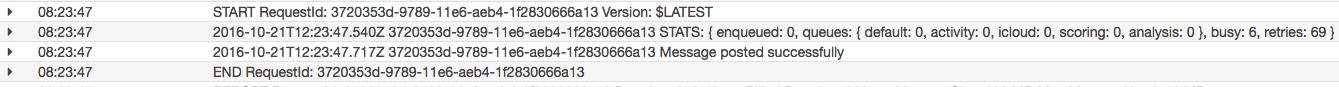

Grouped by time, each link may contain multiple invocations. A single execution is wrapped with START and END lines, as shown in the logs. Messages in between are our calls to console.log from within the function. We logged the results of the Sidekiq queue poll for debugging purposes, so you can see that below:

This was an invocation where the number of retries was below 50 and, as a result, we didn’t need to POST to Slack. Let’s take a look at the opposite:

We can see the “Message posted successfully” log line, indicating our message was successfully sent to Slack’s incoming webhook.

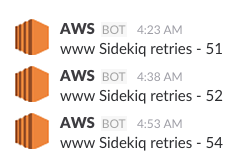

Finally, here’s what the resulting message looks like in Slack when the number of Sidekiq retries is above our threshold:

Conclusion

Using new tools is fun, but not when they bring operational complexity. I’ve personally found AWS Lambda to be a great place for endpoints and functionality that feel cumbersome to include in my applications. Bringing these notifications into Slack has been a big win for our team. We took a previously untrustworthy notification (the New Relic error rate) and brought some clarity to the state and health of our applications.